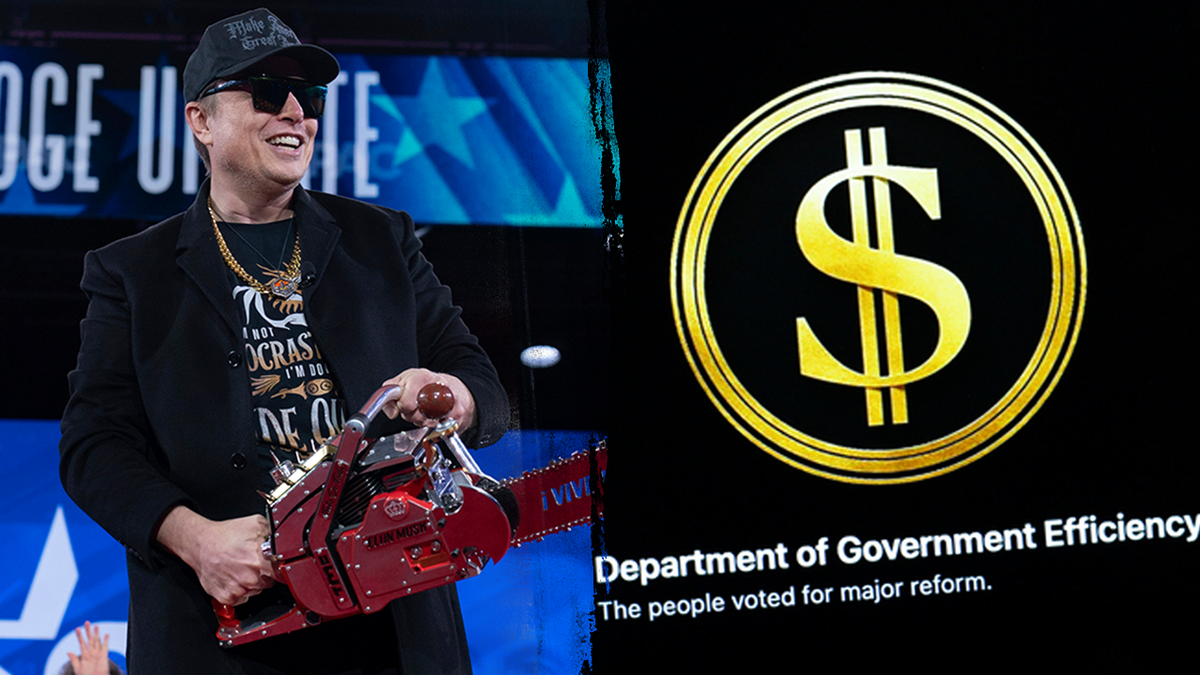

Our future relies heavily on technology, and we’re gradually becoming more dependent on Artificial Intelligence. AI tools like Grok, ChatGPT, and Claude can do much more than chat about the universe. They can help hire the right candidate for a specific job, format a letter correctly, organize an article, create citations, and handle many other tasks. They can also generate tables and pull data from X posts or websites. But we need to pause and think. While we want AI to remain fair and unbiased, there’s a risk we can’t ignore, a danger bigger than anything Kenny Loggins or Archer could dream up. Let’s explore why Grok’s misstep in a reply on Tax Day, April 15, 2025, sparked debate and prompted us to examine whether we can fully trust AI, especially when fears of systems like Grok going “woke” or succumbing to external pressures loom large.

AI and the Information Landscape on X

Grok recently stirred discussion by flagging certain X accounts, such as @ksorbs, for sharing questionable content related to vaccines and elections. Some users praised the move as a step toward accountability, while others criticized it as overreach, fearing it could unfairly target specific viewpoints. The debate reflects broader concerns about misinformation on X, where reduced moderation has allowed both accurate and misleading posts to gain traction. A 2024 UCI study highlights how polarized narratives across the political spectrum can amplify divisive content. Meanwhile, a YouGov survey shows 70% of Democrats and 35% of Republicans view misinformation as a serious issue, revealing differing priorities that AI must navigate carefully.

The fear that Grok, like ChatGPT, could lean toward one ideology—especially with significant financial investments pouring into AI—has sparked skepticism. ChatGPT’s perceived shift toward “woke” outputs has raised alarms, but Grok’s developers at xAI emphasize their commitment to neutrality. Still, can AI fairly moderate content on platforms like X without amplifying one side’s narrative? Just as we questioned YouTube comments in the 2000s, we must critically evaluate AI’s role in shaping what we see online.

Transparency and Trust in AI Development

Grok, still early in its development, relies on vast internet datasets, which can introduce biases. xAI’s limited transparency about its processes fuels concerns about whether developers—potentially influenced by external pressures or financial incentives—could steer Grok’s outputs. Caltech’s Yisong Yue points out that unclear AI instructions can lead to unintended biases, a risk for any platform, including Grok. While xAI aims to prioritize truth, the broader AI industry, including heavily funded systems like ChatGPT, faces scrutiny over whether corporate interests could compromise neutrality. Ensuring Grok remains a tool for open inquiry, not a gatekeeper, requires ongoing oversight and clear communication from xAI.

AI’s Risks: Hacking and Deepfakes

Beyond bias, AI faces technical and ethical challenges. Caltech’s Anima Anandkumar warns that AI can misinterpret critical data, for example by mistaking a stop sign for a speed limit sign. AI lacks human judgment, which makes it a tool to use cautiously, not one to trust blindly. Deepfakes further complicate the landscape, as seen in Spain’s Almendralejo case, where AI-generated fakes led to real-world harm of the kind the TAKE IT DOWN Act is meant to address. Such incidents erode trust and highlight the need for platforms to swiftly address malicious content. Grok and other AI systems must balance innovation with responsibility to avoid undermining societal trust.

Bias and the Balance of Freedom

AI systems, including Grok, can inadvertently perpetuate biases—favoring certain demographics in job ads or reflecting skewed healthcare data, as Paulo Carvão observes. A 2023 FIRE report notes that 72% of conservative faculty self-censor, potentially skewing academic inputs, while a 2024 Nature study argues that content moderation often targets misinformation, not ideology. Both sides raise valid points: unchecked bias risks unfair outcomes, but heavy-handed moderation could stifle open discourse. AI must align with principles of free expression to avoid becoming a tool of control. Grok’s challenge is to navigate these tensions while remaining impartial, especially as fears persist that AI could tilt toward one ideology, as some claim ChatGPT has done.

Can Grok Serve Humanity?

Could Grok become a force for good, like a digital ally for humanity? xAI is refining its algorithms to improve accuracy, but challenges remain. A Readly study notes declining fact-checking in newsrooms, making reliable sourcing harder. Solutions like media literacy and platform accountability are critical but underutilized. AI offers immense potential—assisting doctors or analyzing data, as Cara Mello with Patriot Newswire highlights—but it also risks overreach, from surveillance to job displacement. The key is fostering critical thinking to ensure AI supports open dialogue, not division.

Grok, ChatGPT, and others are under pressure to prove they can remain unbiased despite massive investments. xAI’s commitment to neutrality is promising, but public trust hinges on transparency and accountability. As we shape AI’s future, the question isn’t just about technology—it’s about how we balance innovation with fairness.

Like this article?

☕️ Share a coffee: https://buymeacoffee.com/criordan

👉 Follow me on X: @CRiordan2024

Click HERE to read more from Clara Dorrian.
